space cadet


An Air Force Pilot Will Battle AI In A Virtual F-16 Dogfight Next Week. You Can Watch It Live.

Mark your calendar, place your bets, get out the popcorn.

www.forbes.com



Mark your calendar, place your bets, get out the popcorn. An Air Force F-16 pilot will take on an artificial intelligence algorithm in a simulated dogfight next Thursday. And you can watch it live.

The Defense Advanced Research Projects Agency is running the event, called the AlphaDogfight Trials, as part of DARPA’s Air Combat Evolution (ACE) program.

The action will kick off Tuesday with AI-vs.-AI dogfights featuring eight teams that developed algorithms to control a simulated F-16. These lead into a round-robin tournament that will select one algorithm to face off against a human pilot on Thursday between 1:30 and 3:30 p.m. EDT. You can register to watch the action online. DARPA adds that a “multi-view format will afford viewers comprehensive perspectives of the dogfights in real-time and feature experts and guests from the Control Zone, akin to a TV sports commentary desk.”

With remarks from officials, including USAF Colonel Daniel “Animal” Javorsek, head of the ACE program, plus recaps of previous rounds of the Trials, scores and live commentary, it’ll be just like Sunday Night Football, only on Thursday afternoon.

DARPA says ACE is about building trust in artificial intelligence, particularly among U.S. fighter pilots, as the Pentagon seeks to develop unmanned systems that will fly and fight alongside them.

“We envision a future in which AI handles the split-second maneuvering during within-visual-range dogfights, keeping pilots safer and more effective as they orchestrate large numbers of unmanned systems into a web of overwhelming combat effects,” Col. Javorsek said in a 2019 press release.

An F-16 from the 20th Fighter Wing at Shaw AFB, S.C. (Photo: DVIDSHUB.NET)

Eight teams were selected in 2019 to compete in the trials: Boeing-owned Aurora Flight Sciences, EpiSys Science, Georgia Tech Research Institute, Heron Systems, Lockheed Martin, Perspecta Labs, PhysicsAI and SoarTech.

On day one of the competition, the teams will fly their respective algorithms against five AI systems developed by the Johns Hopkins Applied Physics Lab.

The industry/academia teams will then face off against each other in a round-robin tournament on the second day. The third day will see the top four teams competing in a single-elimination tournament for the championship. The winner will then fly against a human pilot in the marquee engagement at Johns Hopkins’ APL.

The point of it all, as Col. Javorsek noted, is to build trust in AI in the life-and-death environment of aerial combat. Trust requires sharing — in this case the kind of information that American fighter pilots will likely demand. With that in mind, I asked DARPA for an interview with the F-16 pilot who will go up against all that math.

That request was denied on the grounds of “operational security.” Col. Javorsek declined to elaborate on the particulars of the dogfight scenario but assured me that careful consideration has been given to the simulated environment to ensure an engagement in which skill alone will determine the outcome.

An F-16 aggressor pilot in a 64th Aggressor Squadron F-16 at Nellis Air Force Base, Nevada.

One can reasonably ask: what operational security concerns would prevent divulging the pilot’s identity, his or her experience, and the basic parameters of the fight?

America’s adversaries and allies would certainly understand the kind of operationally relevant one-on-one air combat maneuvering engagement that AlphaDogfight involves. Given the known quantity that is the F-16 weapons system and years of intelligence on American fighter doctrine and tactics, they’d surely comprehend it on a granular level.

So would American fighter pilots. The first question they’ll likely ask is, “Who’s the pilot in the simulator seat?” We do know from queries to the Air Force’s Air Combat Command and to Col. Javorsek that the pilot in question is an Air National Guard F-16 pilot from the local area.

Presuming that means the Baltimore-Washington, D.C. area, the pilot may be with the 113th Wing’s 121st Fighter Squadron at Joint Base Andrews outside D.C. The pilot is apparently a recent graduate of the F-16 Weapons Instructor Course and, as a Guardsman, likely a high-time Viper driver. Combat experience may or may not be on his resume.

DARPA hasn’t detailed the dogfight setup: what onboard and off-board sensors, weapons, ranges, fuel loads, G-limits, visual acuity, weather conditions, merge altitudes, command-and-control aids or rules of engagement the protagonists will take into the fight. Nor do we know anything about the simulator’s capabilities, configuration or interaction.

Common sense suggests that if skill alone is to be the determining factor, the simulated aircraft should be identical, with everything else equalized.

Turn On, Tune In

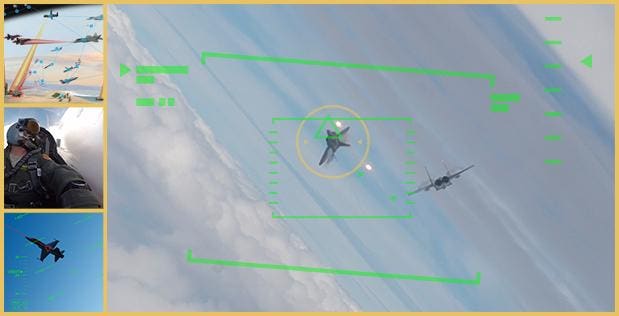

Colonel Javorsek said in an email that AlphaDogfight viewers will have access to some live data, such as relative closure and distance and throttle, stick and rudder positions, shown in heads-up-display-style presentations on the “Dogfight” or “Pilot Point of View” channels.

The “Dogfight Channel,” which AlphaDogfight viewers can select on DARPA’s ADT TV page.

DARPA

Nevertheless, we don’t really know what the human pilot or the AI teams are expecting. If such information isn’t provided in detail, one would imagine that fighter pilots will not be overflowing with trust or confidence (though they may be entertained), regardless of who wins.

But DARPA sure hopes they’ll watch.

“We are still excited to see how the AI algorithms perform against each other as well as a Weapons School-trained human and hope that fighter pilots from across the Air Force, Navy, and Marine Corps, as well as military leaders and members of the AI tech community will register and watch online,” Col. Javorsek said in a DARPA statement. “It’s been amazing to see how far the teams have advanced AI for autonomous dogfighting in less than a year.”